Updated July 27, 2021 at 12:48 PM ET

Instagram is introducing new safety settings for young users: It's making new accounts private by default for kids under 16, blocking some adults from interacting with teens on its platform, and restricting how advertisers can target teenagers.

The changes come as the Facebook-owned photo-sharing app is under pressure from lawmakers, regulators, parents and child-safety advocates worried about the impact of social media on kids' safety, privacy and mental health.

"There's no magic switch that makes people suddenly aware about how to use the internet," Karina Newton, Instagram's head of public policy, told NPR. She said the changes announced on Tuesday are aimed at creating "age-appropriate experiences" and helping younger users navigate the social network.

"We want to keep young people safe, we want to give them good experiences, and we want to help teach them, as they use our platforms, to develop healthy and quality habits when they're using the Internet and apps and social media," she said.

Like other apps, Instagram bans kids under 13 from its platform, to comply with federal privacy law. But critics say the law has left older teens exposed. Some members of Congress have called for expanding privacy protections for children through age 15.

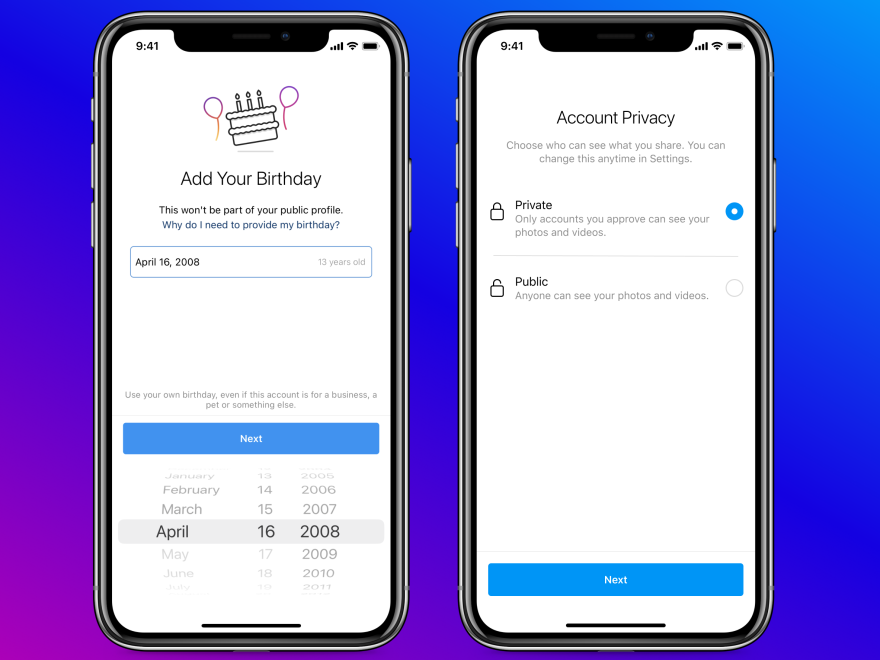

Starting this week, when kids under 16 join Instagram, their accounts will be made private automatically, meaning their posts will only be visible to people they allow to follow them. (The default private setting will apply to people under 18 in some countries.)

Teens who already have public Instagram accounts will see notifications about the benefits of private accounts and how to switch. The company said that in testing, about 80% of young people kept the private setting when signing up.

Instagram is also taking steps to prevent what it calls "unwanted contact from adults." It says adults who have not broken Instagram's rules but have shown "potentially suspicious behavior," such as being blocked or reported by young people, will have limited ability to interact with and follow teens.

For example, they won't see teenagers' posts among the recommendations in Instagram's Explore and Reels sections, and Instagram won't suggest they follow teens' accounts. If these adults search for specific teens by username, they won't be able to follow them, and they'll be blocked from commenting on teens' posts.

"We want to ensure that teens have an extra barrier of protection around them out of an abundance of caution," Newton said.

That builds on changes Instagram made in March, when it banned adults from sending private messages to teens who don't follow them.

Facebook is also changing the rules for advertisers on Instagram as well as its namesake app and its Messenger app. Starting in a few weeks, advertisers will be able to target people under 18 based only on age, gender and location, and not on other information the company tracks, such as users' interests or habits on its own apps or other apps that share data with the company.

Facebook and Instagram also say they are working on better methods of verifying users' ages, so they can determine when policies for teens should apply, and do a better job of keeping kids under 13 off the apps.

Instagram has come under fire in the past over how it handles young users. It started asking users for their birth dates only in 2019. Before then, it simply asked them to confirm whether or not they were at least 13.

Newton says Facebook and Instagram already use artificial intelligence to scan profiles for signals that suggest whether a user is older or younger than 18. That includes looking at what people say in comments wishing users a happy birthday. Now they are expanding that technology to determine the age of younger users.

She acknowledged that determining age is a "complex challenge" and that Instagram has to balance questions of privacy when using technology to estimate users' age.

"This is an area that isn't foolproof," she said. Facebook and Instagram are in discussions with other tech groups, including the makers of internet browsers and smartphone operating systems, on sharing information "in privacy-preserving ways" to help determine if a user is old enough for an online account, she said.

The changes "appear to be a step in the right direction," said Josh Golin, executive director of the children's advocacy nonprofit Fairplay, formerly called the Campaign for a Commercial-Free Childhood, which is one of the groups pushing to extend legal privacy protections for teens.

"There has been such a groundswell to do more to protect teen safety, but also around manipulative behavioral advertising," he told NPR. "So it's good that they're responding finally to that pressure."

But Golin said his group would continue pushing for tighter regulation of how tech companies can use kids' data.

"The idea that you shouldn't be allowed to use a child's data in a way that's harmful to them is something that we absolutely need to see," he said. "In no other context that I'm aware of do we treat 13-year-olds like they're adults."

As scrutiny of powerful technology companies has grown in Washington, their impact on kids has emerged as a bipartisan area of concern.

"Your platforms are my biggest fear as a parent," Rep. Cathy McMorris Rodgers, R-Wash., told the CEOs of Facebook, Google and Twitter at a congressional hearing in March. "It's a battle for their development, a battle for their mental health, and ultimately a battle for their safety," she said, pointing to research linking social media to depression among teens.

That hearing came shortly after Instagram sparked a new round of controversy with news it is working on a version of the app for kids under 13. The project has drawn opposition from child safety groups, members of Congress, and 44 attorneys general, who urged Facebook to scrap the idea entirely.

But Facebook CEO Mark Zuckerberg has defended the idea, saying that under-13s are already using Instagram, so it would be better to provide them a dedicated version.

Newton echoed that point, saying that young people are online and, despite age limits, "a lot of times they're using products that weren't built or designed for them."

On Tuesday, Pavni Diwanji, Facebook's vice president of youth products, wrote in a blog post that the company is continuing to develop "a new Instagram experience for tweens" that would be managed by parents or guardians.

"We believe that encouraging them to use an experience that is age appropriate and managed by parents is the right path," Diwanji said.

Editor's note: Facebook and Google are among NPR's financial supporters.

Copyright 2021 NPR. To see more, visit https://www.npr.org.