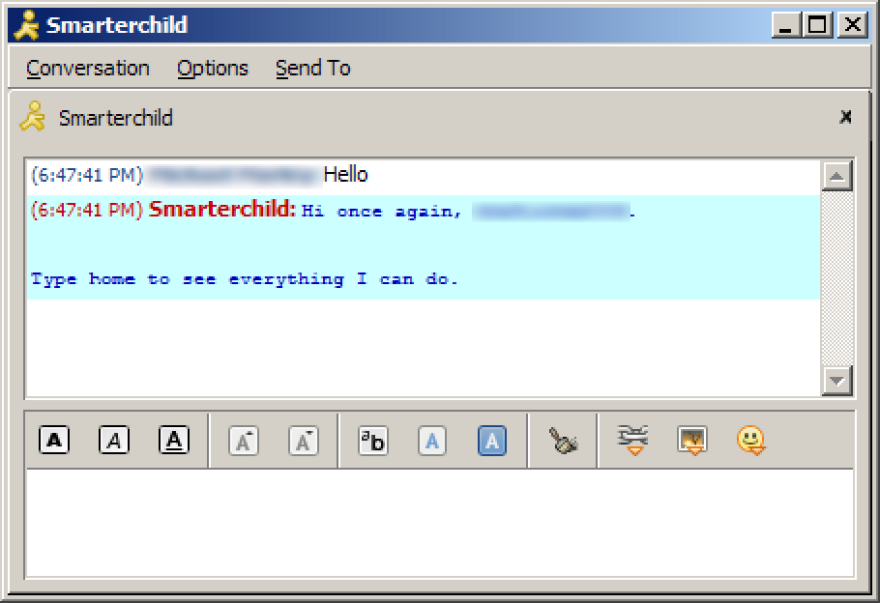

In the year 2000, logging onto the Internet usually meant sitting down at a monitor connected to a dial-up modem, a bunch of beeps and clicks, and a "You've got mail!" notification. In those days, AOL Instant Messenger was the Internet's favorite pastime, and the king of AIM was SmarterChild, a chatbot that lived in your buddy list.

A chatbot is a computer program designed to simulate human conversation, and SmarterChild was one of the first chatbots the public ever saw. The idea was that you would ask SmarterChild a question — "Who won the Mets game last night?" or "Where did the Dow close today?" — then the program would scour the Internet and, within seconds, respond with the answer. The company that built SmarterChild, a startup called ActiveBuddy, thought it could make money by building custom bots for big companies and made SmarterChild as a test case.

And people did use SmarterChild — a lot. At its height, SmarterChild chatted with 250,000 people a day.

Responding like a human

But most of those people weren't asking SmarterChild about sports or stocks. They were just chitchatting with it, about nothing in particular — like how you'd chat with a friend. "Our goal was to make a bot people would actually use, and to do that we had to make the best friend on the Internet," says Robert Hoffer, one of its creators.

By today's standards, SmarterChild wasn't all that fancy a technology, and many of its users were teenagers trying to trick the bot into saying something subversive or stupid. (Its designers called this "Captain Kirk-ing the bot," after the Star Trek captain with a knack for outwitting his machine adversaries.) But still, SmarterChild's users formed a unique kind of bond with it.

Fast forward 17 years and ... well, you know what the world is like now. There are computers in our pockets, our cars, our refrigerators — and we talk to them, just like people talked to SmarterChild two decades ago: "Siri, what's the weather going to be like today?" "Alexa, turn off the lights."

The chatbot mania is led by personal assistants like Apple's Siri, Amazon's Alexa or Google Home, followed by a fleet of single-use bots from smaller startups. One lets you send a text message to find out the balance of your checking account. Another will help you and your office mates coordinate group purchases. Howdy is billed as your "digital coworker" who automates repetitive tasks like collecting lunch orders.

Still, few of these bots seem to have captured our imaginations quite like SmarterChild did. Much of the current chatting tech is still missing something SmarterChild had plenty of: personality.

Where Siri and Alexa tend to be helpful and polite, SmarterChild was sarcastic and snarky. Tell Siri you hate her, and she'll say something benign and frustratingly accommodating: "Well ... I'm still here for you." Talk smack to SmarterChild? "SmarterChild would cut you off from information and would not do anything until you apologized," Hoffer says. "If you offended SmarterChild you had hell to pay." That's a bot responding like a human would.

"Upbeat but not so upbeat"

Most designers agree bots need to have something resembling a human personality.

Chatbots work best when people interact with them like they would a human, so the machine can apply the conventions of conversation and doesn't confront the human with unnatural syntax. A personality encourages that kind of natural back-and-forth. You wouldn't talk to your toaster the way you talk to your friend — unless your toaster had a great sense of humor.

"With chatbots, so much of the interface has been stripped away that the user experience really depends on the dialogue," says Ari Zilnik, a UX (user-experience) designer and consultant whose latest project was an AI bot for a text-message game called Emoji Salad. "The experience of interacting with a chatbot is very similar to interacting with a human, so we sense a personality in that conversation."

So how do you build a personality from scratch? Designers often refer to the "Big Five" personality traits, which come from the five-factor model that many psychologists use to describe human personality. According to the theory, every personality has five dimensions: agreeableness, conscientiousness, neuroticism, extraversion and openness.

Bot designers decide where their bot stands on each of those five traits, and then write dialogue to express that personality through language. Basically, they're giving the bots a database filled with snippets of natural language to use when talking with humans.
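To make that idea concrete, here is a minimal sketch of what such a setup might look like. It is an illustration only, not how any bot mentioned here was actually built: the `Personality` class, the trait scores, the `SNIPPETS` table and the `pick_snippet` function are all hypothetical, and choosing a tone from agreeableness alone is a simplifying assumption.

```python
from dataclasses import dataclass

# Hypothetical sketch: every name, score and line of dialogue below is an
# illustrative assumption, not taken from any real bot.


@dataclass(frozen=True)
class Personality:
    # Each "Big Five" trait is scored from 0.0 (low) to 1.0 (high).
    agreeableness: float
    conscientiousness: float
    neuroticism: float
    extraversion: float
    openness: float


# A tiny "database" of snippets: one polite and one snarky line per situation.
SNIPPETS = {
    "greeting": {
        "polite": "Hi there. How can I help?",
        "snarky": "Oh, it's you again.",
    },
    "insulted": {
        "polite": "I'm sorry you feel that way.",
        "snarky": "Apologize first, then we'll talk.",
    },
}


def pick_snippet(personality: Personality, situation: str) -> str:
    """Choose the line that matches the bot's agreeableness score."""
    tone = "polite" if personality.agreeableness >= 0.5 else "snarky"
    return SNIPPETS[situation][tone]


# Two made-up bots: one accommodating, one with an attitude.
assistant = Personality(0.9, 0.9, 0.1, 0.5, 0.5)
wiseguy = Personality(0.2, 0.6, 0.4, 0.8, 0.7)

print(pick_snippet(assistant, "insulted"))
print(pick_snippet(wiseguy, "insulted"))
```

A real bot would weigh all five traits and draw on far more dialogue, but the shape is the same: a personality profile on one side, a stock of pre-written lines on the other.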

When they were working on Emoji Salad, Zilnik and his team spent time playing the game with Zilnik standing in for the chatbot, both to see where the conversation went and to plan the pieces of dialogue they would need to write for the bot.

The dialogue is usually written by bot-focused UX designers — often, creative types more interested in human relationships than writing code (though there's some of that too).

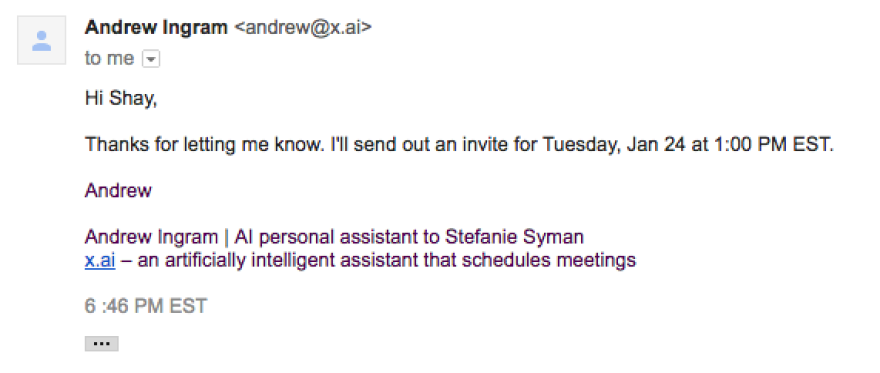

For example, at x.ai, a company that makes an artificially intelligent bot that schedules meetings via email, the process is handled by Anna Kelsey and Diane Kim, who have backgrounds in theater and cognitive science, respectively.

At x.ai, Kelsey and Kim approximated the "Big Five" traits by modeling their bots, named Amy and Andrew, after the ideal personal assistant: "Polite but not excessively formal, easy to talk to, super clear about everything," says Kelsey. "They're upbeat but not so upbeat that there are exclamation points around everything."

Personality by design

After defining a bot's personality comes an even higher hurdle: actually helping the bot express that personality through language.

Since bots and humans interact almost exclusively through either text or spoken language, designers work to express personality with that limited palette. (There are exceptions. For example, the banking app Digit sends the occasional GIF of a pop star "making it rain" cash to celebrate the high balance in a user's savings account.)

As Kelsey and Kim wrote dialogue for Amy and Andrew to use with users, they tried to find ways to give the bots a human touch. Through trial and error, they quickly learned that even subtle changes in wording can have a big impact.

That's why, if Andrew writes to cancel a meeting, his tone softens. If he's rescheduling for a second time, he apologizes: "I'm sorry." If he has to reschedule a third time it's, "I'm so sorry."
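A rule like that is simple to express in code. The sketch below is a guess at the shape of the logic, not x.ai's implementation: the function name `reschedule_apology` is hypothetical, and the behavior for a first reschedule (no apology) and for a fourth or later one (same as the third) is assumed, since only the second and third cases are described here.

```python
def reschedule_apology(times_rescheduled: int) -> str:
    """Return an apology that escalates with each reschedule.

    Hypothetical sketch. Only the second and third cases come from the
    article; the first and fourth-or-later cases are assumptions.
    """
    if times_rescheduled < 1:
        raise ValueError("times_rescheduled must be at least 1")
    if times_rescheduled == 1:
        return ""  # assumption: no apology the first time
    if times_rescheduled == 2:
        return "I'm sorry."
    return "I'm so sorry."  # third time, and (assumed) every time after


for attempt in (1, 2, 3):
    print(attempt, repr(reschedule_apology(attempt)))
```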

Even though the tech world is obsessed with testing and debugging, the testing process for the team developing bot personalities is different. "It's a lot more touchy-feely," Kelsey says. "We're looking at it and asking ourselves, 'Does this feel right?' "

When I interviewed Kelsey and Kim, we scheduled our call using Andrew the bot as an intermediary. "Hi Shay," he wrote. "Happy to get something on the calendar." He gave me a few time slots that worked for the x.ai team, I chose one, and then, even though I knew that I was talking to a piece of software, I couldn't help myself. I signed off with a friendly "Looking forward to it!"

But would millions of people spend hours chatting with a bot that's perpetually "happy to get something on the calendar"? That's not really the goal for the x.ai team, but you have to wonder whether humans will ever really connect with the growing army of uber-polite and relentlessly nonconfrontational bots.

"In order for your bot to be popular, it has to have a personality, and in order for it to have a personality, it has to have a soul," Hoffer argues. "It has to be able to distinguish between right and wrong, and good and bad, and make moral judgments. My daughter has known the difference between right and wrong since she was 3, and until you have a bot on the same level as a 3-year-old, you're not going to have a popular bot."

In 2006, Microsoft bought ActiveBuddy, the creator of SmarterChild, and worked to monetize the bot. In the process, Hoffer says they "lobotomized" him, smoothing over the rough edges of his personality. Eventually, SmarterChild was decommissioned.

Shay Maunz is a freelance writer in Brooklyn. She's on Twitter @shaymaunz.

Copyright 2020 NPR. To see more, visit https://www.npr.org.